Advice for building impactful data products

Countless data posts out there will tell you to do things like “harness the cloud” or “run experiments.” The vagueness of these posts is not helpful. You can’t “tip and trick” your way to a successful data product. You have to have the right mindset. I got frustrated reading these posts and decided to write my own, but one that’s not presented as collection of tips, tricks, or rules, but as guidelines. Following all of these doesn’t guarantee success, but they might be useful for you…

What follows is a collection of things I have recently observed at client meetings and also during project work. This post is inspired by an excellent article by Martin Goodson, “Ten Ways Your Data Project is Going to Fail” and includes my personal views on many things I currently see in data projects (That’s one problem right there … thinking in terms of projects instead of products.)

1. Think simple first and then, if it’s really needed, get more complex

Since AlphaGo beat one of the top Go players last year, artificial intelligence (a.k.a. deep learning) is a hot topic. Today, if you don’t do AI, you are not one of the cool kids. Too many clients ask me how they can use AI to do cool stuff. The problem is that in most cases a simple model is enough. Don’t overthink it.

2. Define your data product MVP and release as early as possible

Establish a working end-to-end pipeline early for your data minimum viable product and deploy it. Only data science models that are in production generate real value even if it is not perfect, which makes sense — so do yourself a favor and create an API first culture. Many data science teams still focus too much on improving their models instead of having a big picture of the problem. That big picture will become clearer if you deploy early. And you know what, collecting more data will help to improve your model for free. 😉

3. Establish your target architecture and workload while you go

Many clients ask me about the optimal sizing of the required data environment upfront. They want to know, for example, if 1.5TB memory is enough for their Spark cluster, before we even get to work on the problem. I understand they need this for budgeting, but my approach is iterative. I will get a better understanding of what I need while working on the problem. All together now: “Things will change.”

4. Use the right tool for the right problem

Is there anything worse than walking into a heated debate over what tool is better than another? This is typical whether it’s R vs. Python vs. SAS vs. Spark vs. Dask vs. Flink vs. Kafka vs. RabbitMQ vs. Redis vs. Geode vs. TensorFlow vs. Theano vs. Torch and so on… But here’s the rub: there isn’t a single tool that can solve every problem. My answer to this is: always work with the tool that best solves your problem. Tools come and go, so don’t become too infatuated with any particular one. (Particularly, I’m looking at you SAS.)

5. Build things that are meaningful

Sometimes I work with clients that want to build features that don’t make any sense. You don’t need to use word2vec if you are analyzing sensor data! So do your user research before you really invest a lot of money and time in building a given feature. If it doesn’t affect or inform the product’s goals, don’t build it.

6. Prioritize the projects with the biggest business impact

Let’s say you have this huge digital/data/big data transformation project with, say, thousands of projects going on at the same time. Where do you focus? You have to define what’s important and validate that assumption before you dive into building data tools and reporting to support a project. Data takes a ton of time to work on, and it needs to be brought on methodically, not on a whim.

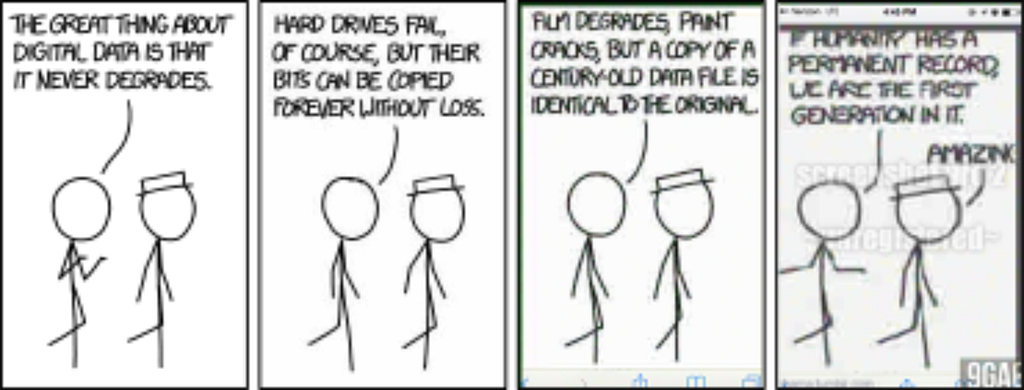

7. Measure your model and improve it from time to time

There is a saying, “Don’t change a running system.” But if you stick to this, then the system may run away from you. I had an engagement where a model was running for 3+ years and then the client realized that the results it produced didn’t match the problem anymore. That’s the problem with data. It changes. And this is especially problematic if you do “select all” in your code. 😉

So, recheck your model regularly, you’ll thank me later. A test-driven development culture can also help to mitigate this issue.

8. Communication + collaboration = 🔑

A data science team I recently talked to told me they have problems getting meaningful work within the company. The problem was that their CIO liked the idea of “big data” but established the data science team as a separate initiative, instead of being embedded into the business. They’ve been isolated for two years now. I told them to implement pair programming to break down barriers between different teams. Working in a balanced team will also help a lot.

Here are some useful pieces I’ve read that help me figure out how to approach a data project/product:

- Ten Ways Your Data Project is Going to Fail — Martin Goodson

- Data Transformation is the New Digital Transformation — James Governor

- What Can Data Scientist Learn from DevOps? — Donnie Berkholz

- Machine Learning for Product Managers — Neal Lathia

- Rules of Machine Learning: Best Practices for ML Engineering — Martin Zinkevich

- Data Science in the Balanced Team — Ian Huston

- 7 Super Simple Steps From Idea To Successful Data Science Project — Andreas Kretz

- What is Hardcore Data Science — In Practice? — Mikio Braun

- The Next Generation of Data Products? — Hilary Mason

Last but not least, fellow data folks, tell me:

What are some guidelines you use to ensure a successful project?

Change is the only constant, so individuals, institutions, and businesses must be Built to Adapt. At Pivotal, we believe change should be expected, embraced and incorporated continuously through development and innovation, because good software is never finished.

8 “Simple” Guidelines For Data Projects was originally published in Built to Adapt on Medium, where people are continuing the conversation by highlighting and responding to this story.

About the Author

Follow on Twitter Follow on Linkedin Visit Website